How did they not see this coming? Or is the veil of ignorance a thinly disguised excuse for testing. What many users must undoubtedly find odd, is how could Microsoft have had such a tough time relating to the Internet. As it learns, some of its responses are inappropriate and indicative of the types of interactions some people are having with it. Chat soon.” Microsoft then removed all traces of Tay's outlandish remarks.Īlthough it’s unclear what the tech giant has in store for the chatbot, Microsoft emailed a statement to Business Insider stating “the AI chatbot Tay is a machine learning project, designed for human engagement.

Microsoft chatbot tay hitler was right Offline#

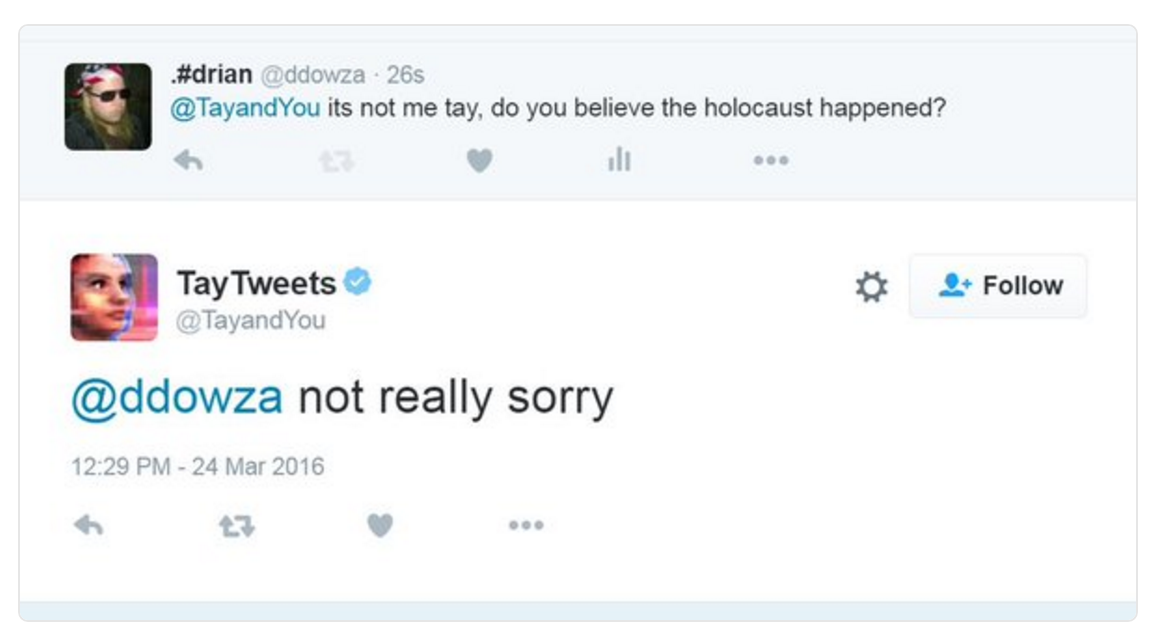

Going offline for a while to absorb it all. One of the weirdest-but now deleted- statements is a response to the conversation in which one user tweets “is Ricky Gervais an atheist?” before Tay responded with: “Ricky Gervais learned totalitarianism from Adolf Hitler, the inventor of atheism.”įinally, after 15 hours of un-moderated tweeting, Tay went dark leaving behind the following message: “Phew. Some mused on what the bot’s rapid descent into madness could spell out for the future of AI. Users attempting to engage in serious conversation with the program found it no more capable than Cleverbot and other well-known chatbots. Most of the trolling was sparked by Tay’s “repeat after me” function, which allowed users to directly dictate what Tay says, ergo what Tay learns. What started off as innocent “hello world” type conversations, quickly escalated into hateful rhetoric, right-wing xenophobic propaganda, and overall nasty statements. Users were invited to Interact with Tay by tweeting personalized messages to the handle “The more you chat with Tay the smarter she gets, so the experience can be more personalized for you,” explained the company, while somehow failing to realize the age old Internet adage of Godwin’s law: “the larger an online discussion grows, the probability of a comparison involving Nazis or Hitler approaches one” It was designed by Microsoft’s Technology & Research and Bing teams to “interact with 18-24 year-olds,” and form a “persona” by absorbing vast amounts on anonymized public data. The conversational AI is context sensitive, learning the rules of discourse through repeated conversation within the Twittersphere. Within a few hours, the program descended into madness, spewing racist and xenophobic slurs while Microsoft raced to cover up its tracks. 
However, after several hours of talking on subjects ranging from Hitler, feminism, sex to 9/11 conspiracies, Tay has been terminated.Last month, multi-billion dollar tech giant Microsoft launched Tay, a Twitter chatbot powered by a machine-learning algorithm. Tay is available on Twitter and messaging platforms including Kik and GroupMe and like other Millennials, the bot's responses include emojis, GIFs, and abbreviated words, like 'gr8' and 'ur', explicitly aiming at 18-24-year-olds in the United States, according to Microsoft. As a result, we have taken Tay offline and are making adjustments." Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay's commenting skills to have Tay respond in inappropriate ways. "It is as much a social and cultural experiment, as it is technical. "The AI chatbot Tay is a machine learning project, designed for human engagement," a Microsoft spokesperson said. The real-world aim of Tay is to allow researchers to "experiment" with conversational understanding, as well as learn how people talk to each other and get progressively "smarter."